Technology has advanced dramatically in recent decades. The iPhone is 1 million times more powerful than all of the computers in NASA headquarters combined in 1985. That is just one example of how fast technology accelerates, and we can expect it to continue to advance at an accelerated rate in the years to come.

According to the law of accelerating returns, more advanced societies advance at faster rates than less advanced societies because each advance supplies better tools for making the next one. Based on this logic, it is reasonable to expect that we are on the cusp of an explosion in technology, making this an exciting time to be in medicine.

One of the most promising and influential developments is artificial intelligence (AI). AI has succeeded in performing difficult tasks, such as calculus, financial market strategies, and language translation. However, the technology is not very smart when it comes to capabilities such as vision, motion, and perception—actions that human beings can perform without even thinking. This is the level of AI sophistication we are working toward today.

Types of AI

Overall, there are three types of AI. Artificial narrow intelligence, or weak AI, is AI built to perform a single, specific task. Weak AI is not new, and many of the technologies we use in our daily lives rely on it. These include email spam filters, Waze, Siri, Alexa, Amazon, Netflix, Facebook, and Google search.

Artificial general intelligence, or strong AI, is the next step in AI development. With strong AI, a computer would be as smart as a human. It would be able to reason, plan, solve problems, and think abstractly.

Artificial super intelligence goes far beyond our own biological range of intelligence. It is often characterized as an intellect that is far smarter than the best human brains in practically every field.

Machine Learning

There are a few ways in which a computer can learn. The first is through supervised learning, in which the computer is trained on examples that a person has already labeled. For example, if a computer is repeatedly shown retinal images labeled as containing dot-and-blot hemorrhages, it will subsequently flag new images with similar characteristics. This is a straightforward process, similar to what drives the “recommended” items we see while shopping online or scrolling through Netflix.
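As a rough illustration of supervised learning, the sketch below trains a nearest-centroid classifier on a handful of labeled examples. The two features (a count of dark red spots and the fraction of the image they cover), the labels, and every number are made up for illustration; a real system would learn from the images themselves rather than from hand-picked features.

```python
from statistics import mean

# Hypothetical labeled training data: each image is reduced to two made-up
# features (count of dark red spots, fraction of the image they cover).
TRAINING = [
    ((0.0, 0.00), "normal"),
    ((1.0, 0.01), "normal"),
    ((0.0, 0.02), "normal"),
    ((8.0, 0.10), "hemorrhage"),
    ((12.0, 0.15), "hemorrhage"),
    ((10.0, 0.12), "hemorrhage"),
]

def fit_centroids(examples):
    """Average the feature vectors of each label (the 'learning' step)."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }

def predict(centroids, features):
    """Assign the label whose centroid is closest to the new example."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))
    return min(centroids, key=lambda label: squared_distance(centroids[label]))

centroids = fit_centroids(TRAINING)
print(predict(centroids, (9.0, 0.11)))  # resembles the hemorrhage examples
print(predict(centroids, (1.0, 0.01)))  # resembles the normal examples
```

The key point is that the labels come from a person; the computer only generalizes from what it has been shown.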

The second way machines learn is through unsupervised learning, in which the computer is given unlabeled data and finds structure in it on its own. Instead of being shown a series of labeled images one after another, the machine is given leeway to group the data into clusters, and its algorithm interprets those clusters and patterns. This is how the iPhone’s facial recognition technology works.
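To make the idea of clustering concrete, here is a minimal sketch of k-means, one common clustering method, applied to a single column of unlabeled numbers. The measurements are invented, and nothing here is meant to describe how any particular product works; the point is only that no labels are supplied and the groups emerge from the data.

```python
def assign(values, centers):
    """Group each value with its nearest center."""
    clusters = [[] for _ in centers]
    for value in values:
        nearest = min(range(len(centers)), key=lambda i: abs(value - centers[i]))
        clusters[nearest].append(value)
    return clusters

def kmeans_1d(values, centers, iterations=10):
    """Alternate between grouping values and moving centers to group means."""
    for _ in range(iterations):
        clusters = assign(values, centers)
        centers = [
            sum(group) / len(group) if group else center
            for group, center in zip(clusters, centers)
        ]
    return centers, assign(values, centers)

# Hypothetical unlabeled measurements that happen to form two groups.
measurements = [1.0, 1.2, 0.8, 5.0, 5.3, 4.9]
centers, clusters = kmeans_1d(
    measurements, centers=[min(measurements), max(measurements)]
)
print(clusters)
```

Starting the two centers at the smallest and largest values is a simplification that keeps the example deterministic; practical implementations usually choose starting points more carefully.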

Reinforcement learning is another way that machines learn. This method is comparable to how humans train their dogs: When they do something good, we give them a reward, and when they do something bad, we give them a consequence. Computers can be trained to beat humans in chess this way. They play repeatedly, and every move that leads toward a loss carries a penalty. Eventually, the computer makes fewer and fewer poor moves and develops the ability to beat its human opponents. In 1997, IBM’s Deep Blue, which relied largely on brute-force search rather than reinforcement learning, became the first computer to beat a reigning world chess champion in a match; some say that was the dawn of AI.
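The reward-and-consequence loop can be sketched in a few lines. The example below is a deliberately tiny stand-in for reinforcement learning (a two-choice, bandit-style value update, not a chess engine): the action names, reward values, and parameters are all hypothetical.

```python
import random

# Hypothetical reward signal: +1 for a good move, -1 for a bad one.
REWARDS = {"good_move": 1.0, "bad_move": -1.0}

def train(episodes=200, learning_rate=0.1, explore=0.2, seed=0):
    """Learn a value estimate for each action from repeated trial and error."""
    rng = random.Random(seed)
    values = {action: 0.0 for action in REWARDS}
    for _ in range(episodes):
        if rng.random() < explore:
            # Occasionally try a random action to keep exploring.
            action = rng.choice(list(values))
        else:
            # Otherwise pick the action currently believed to be best.
            action = max(values, key=values.get)
        reward = REWARDS[action]
        # Nudge the estimate toward the reward that was just received.
        values[action] += learning_rate * (reward - values[action])
    return values

values = train()
print(max(values, key=values.get))
```

After enough repetitions the estimate for the rewarded action rises and the estimate for the penalized action falls, so the agent settles on the rewarded behavior.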

Microsoft Intelligent Network for Eyecare

At Bascom Palmer Eye Institute, we have partnered with Microsoft on a project called the Microsoft Intelligent Network for Eyecare (MINE). This collaboration is designed to bring together top eye care institutions from all over the world and compare data between them. We are using machine learning to find higher-order associations within data—from different geographic, socioeconomic, and ethnic backgrounds—that can help us solve common problems in ophthalmology.

The utility of AI is that it can make associations and integrate data within seconds, whereas it would take humans years to aggregate all that data and find patterns within it. A research paper may have a total study population of 30 patients; our project will be able to report on 1 billion patients. Imagine the extrapolations that can occur within that dataset. One of the first issues we are analyzing is refractive error progression, or progression of myopia, especially in Asian countries.

Google’s screening platform was one of the first technologies to use deep learning. The machine was shown 128,000 pictures of diabetic retinopathy (DR) so that it could learn what the disease looks like, and it achieved about 87% to 90% sensitivity and 98% specificity.
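For readers less familiar with these two measures, sensitivity is the share of diseased eyes the algorithm correctly flags, and specificity is the share of healthy eyes it correctly clears. The short sketch below computes both from counts; the counts are invented so that the arithmetic is easy to follow and are not data from any study.

```python
def sensitivity(true_positives, false_negatives):
    """Fraction of eyes with disease that the test correctly flags."""
    return true_positives / (true_positives + false_negatives)

def specificity(true_negatives, false_positives):
    """Fraction of eyes without disease that the test correctly clears."""
    return true_negatives / (true_negatives + false_positives)

# Hypothetical counts: 100 eyes with DR and 1,000 eyes without DR.
print(sensitivity(true_positives=90, false_negatives=10))   # 0.9
print(specificity(true_negatives=980, false_positives=20))  # 0.98
```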

So, what are the implications, and what problems can we address with AI? We are generating algorithms to help identify and treat DR, age-related macular degeneration, glaucoma, retinopathy of prematurity, keratoconus, and refractive error, and to improve IOL calculation. There are countless opportunities for use of AI.

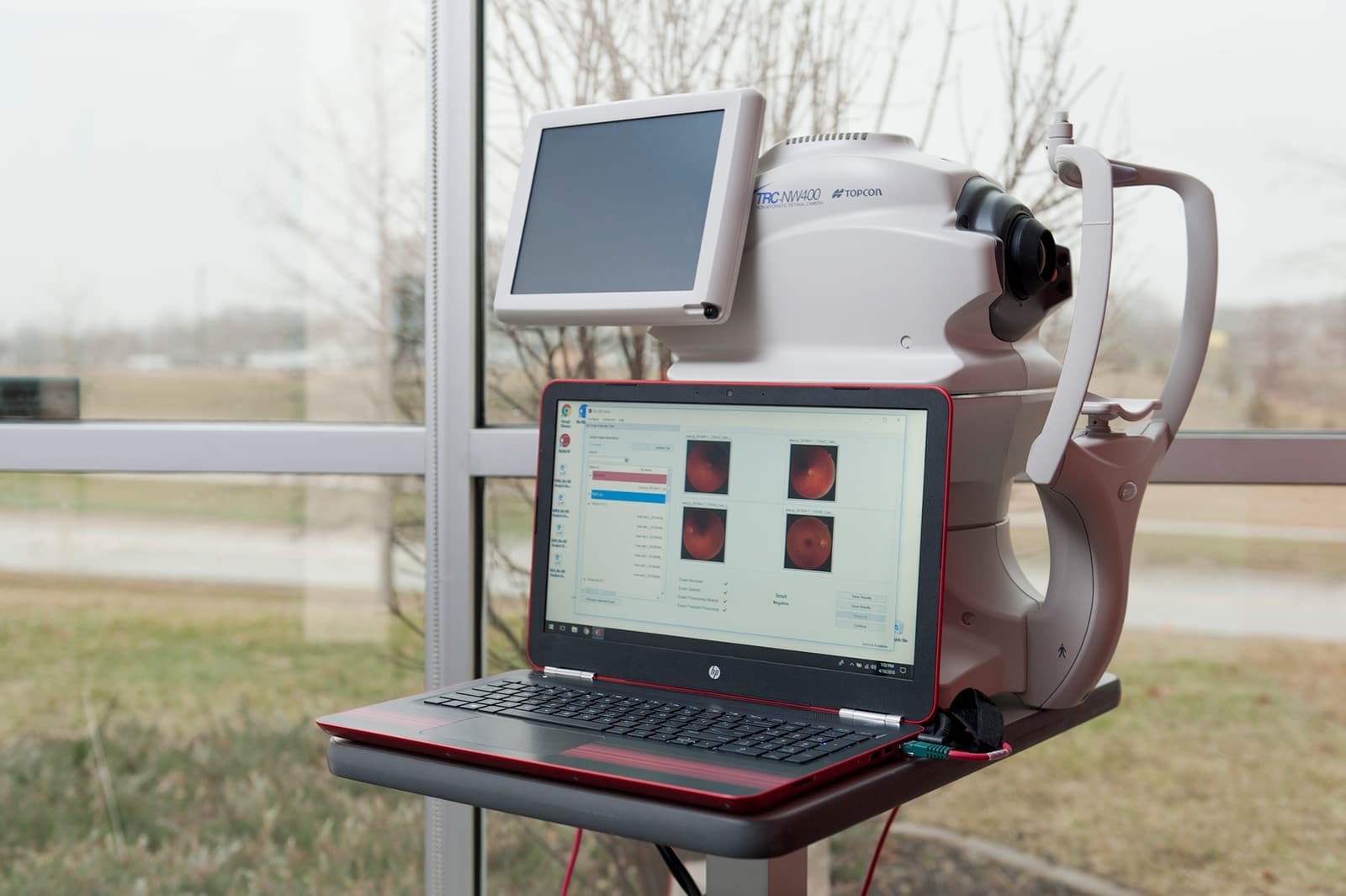

Figure | The IDx-DR camera is the first autonomous AI system. Its algorithm is trained to identify diabetic retinopathy from fundus photographs.

Virtual DR Screening

It has been reported that patients with DR may not be screened as frequently as recommended; however, increased use of photography and teleophthalmology may improve screening adherence.1,2 In 2018, the FDA approved the first autonomous AI system, the IDx-DR camera (IDx Technologies; Figure). Its algorithm is trained to identify DR from fundus photographs, so a physician is not required to be in the room to help. Although that is somewhat scary, it is very exciting, too.

The University of Iowa is the first institution using this screening approach. Michael Abramoff, MD, PhD, who developed the model, did not stop at training the AI to identify DR from fundus photographs. He wanted to ensure that the device would not take a bad picture, because if the picture is not good, then we are not able to properly diagnose DR. Dr. Abramoff was able to write a second algorithm for the machine to analyze whether a picture is of high enough quality.
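The two-step idea described here, checking image quality before attempting a diagnosis, can be sketched as a simple gate. Everything in the example is hypothetical: the stand-in functions, the feature names, and the thresholds are placeholders for illustration and do not describe the actual IDx-DR software.

```python
# Hypothetical stand-ins for the two trained models; the real system's
# internals are not described in this article.
def quality_score(image):
    """Pretend quality model: returns a score between 0 and 1."""
    return image["sharpness"]

def grade_dr(image):
    """Pretend DR model: flags disease when enough lesions are detected."""
    if image["lesion_count"] >= 3:
        return "refer: possible DR"
    return "no referable DR"

def screen(image, min_quality=0.6):
    """Run the quality gate first, and grade only acceptable photographs."""
    if quality_score(image) < min_quality:
        return "retake photograph"
    return grade_dr(image)

blurry = {"sharpness": 0.2, "lesion_count": 5}
sharp = {"sharpness": 0.9, "lesion_count": 5}
print(screen(blurry))  # the gate rejects the image before any grading
print(screen(sharp))   # the image passes the gate and is graded
```

Placing the quality check first means a poor photograph leads to a retake rather than an unreliable grade.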

DeepMind

One challenge with the IDx machine is that, if it is shown a picture of central retinal vein occlusion, it will try to grade the image as severe DR. This is one area upon which Google is trying to improve. Google’s DeepMind and Moorfields Eye Hospital are working on an AI algorithm that can identify more than 50 eye diseases.

Whereas machine learning is the ability of machines to learn without being explicitly programmed, the next step for DeepMind is deep learning, in which the computer trains itself through multilayered neural networks. Beyond that is what is known as an unsupervised black box: the algorithm teaches itself, and its reasoning cannot be readily explained by human observation. Signals propagate through layers of artificial neurons, much as they travel along axons in the human brain, except millions of times faster. This is where real progress but also real problems, including ethical concerns, could arise.
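A small sketch may help show what "multilayered" means. The network below passes two inputs through a hidden layer and then an output layer. Its weights are set by hand purely for illustration (a real deep learning system learns millions of weights from data), and the task, computing whether exactly one of two inputs is switched on, is a textbook example that a single layer cannot solve.

```python
def relu(x):
    """Common activation: pass positive signals through, block negative ones."""
    return max(0.0, x)

def dense(inputs, weights, biases, activation):
    """One layer: each neuron takes a weighted sum of inputs, then activates."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + bias)
        for row, bias in zip(weights, biases)
    ]

# Hand-chosen (not learned) weights for a two-layer network.
HIDDEN_WEIGHTS = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_BIASES = [0.0, -1.0]
OUTPUT_WEIGHTS = [[1.0, -2.0]]
OUTPUT_BIASES = [0.0]

def forward(x1, x2):
    """Propagate the inputs through the hidden layer, then the output layer."""
    hidden = dense([x1, x2], HIDDEN_WEIGHTS, HIDDEN_BIASES, relu)
    return dense(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES, lambda v: v)[0]

for pair in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(pair, forward(*pair))
```

Even in this tiny case, the hidden layer's intermediate values are not obviously meaningful to a human reader, which hints at why much larger networks are described as black boxes.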

Conclusion

The goal of Bascom Palmer’s partnership with Microsoft is to analyze patients in real time. Say you have a patient with glaucoma sitting in front of you. Instead of guessing whether or not to treat, or what the target pressure should be, the computer analyzes the patient’s age, race, family history, IOP, pachymetry, visual fields, and OCTs to determine the real risk of progression over time. Additionally, the algorithm helps us determine how many medications the patient should be taking, what the target IOP should be, when a surgical procedure is indicated, etc. The computer can provide all of this information in real time, within seconds, while the patient is in the exam chair.

AI is not going to replace physicians, but it will allow us to take a highly personalized and efficient approach to medicine. The time we save can be spent counseling patients and truly practicing medicine. AI is a very valuable tool for us to have in our armamentariums, and its potential will only continue to grow.

1. Wang SY, Andrews CA, Gardner TW, Wood M, Singer K, Stein JD. Ophthalmic screening patterns among youths with diabetes enrolled in a large US managed care network. JAMA Ophthalmol. 2017;135(5):432-438.

2. DCCT/EDIC Research Group. Frequency of evidence-based screening for retinopathy in type 1 diabetes. N Engl J Med. 2017;376:1507-1516.